Following on from the first programmatic article in this miniseries dedicated to optical metadata, we’d like to take you on a detailed dive into the few days we spent harvesting it.

In many conventional productions, it seems obvious to us that having optical metadata associated with the dailies would make post-production more efficient: correcting distortion and vignetting, stabilizing a shot, integrating plates shot against green and blue backgrounds… In virtual production, optical metadata is a necessity!

It is undoubtedly the advent of virtual production that has prompted several manufacturers to speed up the development of optical metadata. And lens manufacturers are not the only ones concerned! After all, metadata passes through cameras and other accessories. So we’ve taken advantage of our network of UCO partners to tackle this sprawling subject. Thank you to Cooke, Zeiss, Arri, Sony, Blackmagic and all the rental companies that welcomed us for this challenge.

For the first session of our study, at PhotoCineRent, we’re putting to the test the protocol that seemed relevant to us. This is a somewhat experimental protocol, in the sense that, to our knowledge, there is no precedent for it, as there may be for comparisons of the visual rendering of optics or of the way digital sensors handle high and low light.

In fact, our study of optical metadata is limited to the capabilities and data transmission flow of optics, and does not consider their aesthetic qualities and specificities. A new kind of exercise for us!

A protocol

Of course, we were also interested in how to transmit metadata from the shoot to our post-production colleagues.

We approached the Compagnie Générale des Effets Visuels (thanks to Françoise Noyon, Pierre Billet and Vanessa Lafaille), through the intermediary of the CST, which we associate with our work, so that they could share their experience on the subject of our study and establish a viable protocol, i.e. one that corresponds to concrete practical cases.

We start from the presupposition that, in theory, each shot should have 7 tracking points in every image. This is the minimum number needed to calculate the position in space of the virtual camera reconstructed using 3D tracking. We therefore build tracking targets to guarantee this minimum.

As regards fixed focal lengths, we don’t think it’s necessary to fit all the lenses in the series, but rather one or two short ones, which are more prone to distortion, and a 32mm, 35mm or 50mm reference lens (depending on the optical series and sensor size). As far as zooms are concerned, priority will be given to short zooms (assuming that the long zooms in the series have the same electronic specificities as the short zooms).

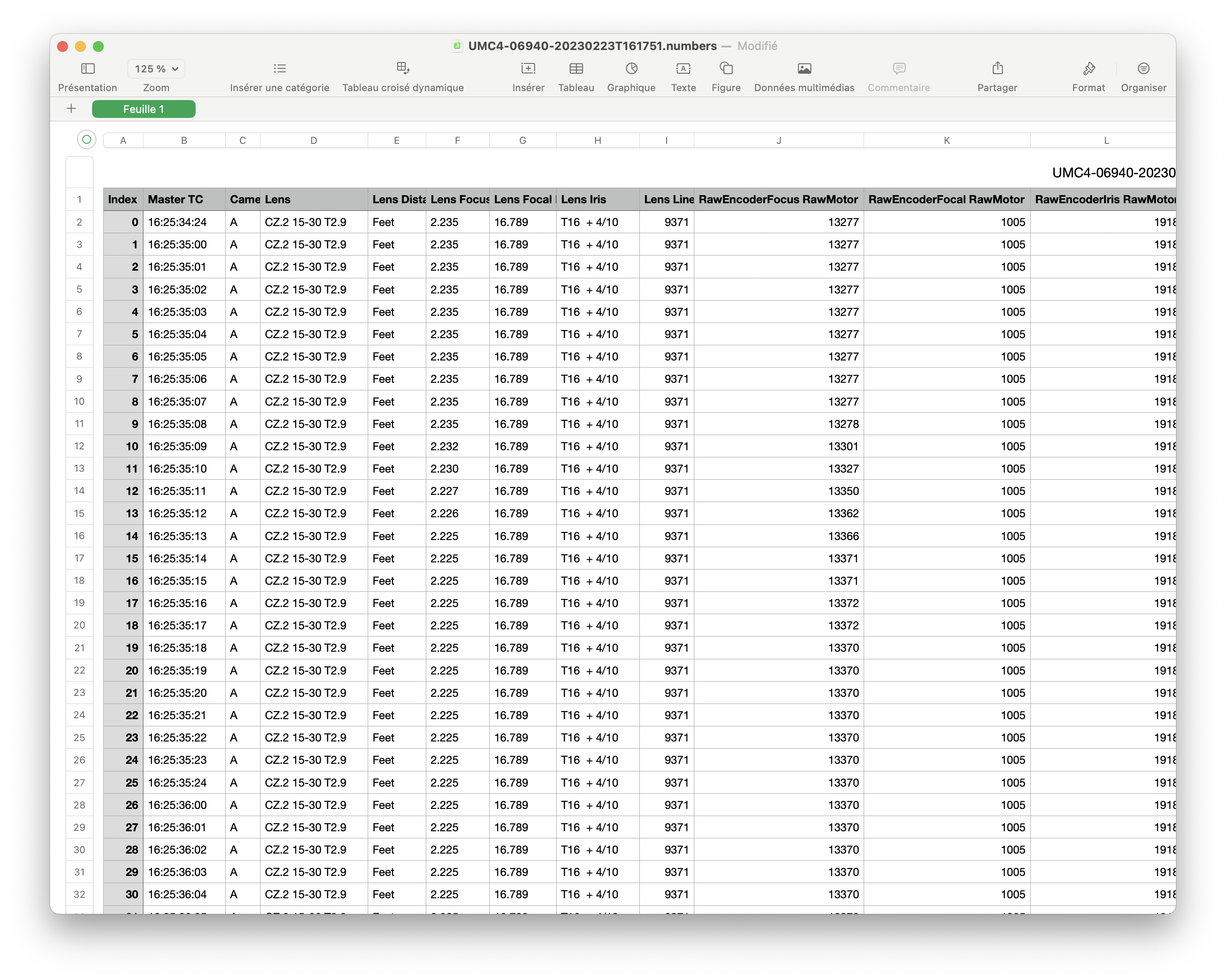

Each configuration giving rise to a shot must be listed, so that we can compare our notes in the field with the metadata actually recorded. We also need to know where the metadata is recorded (embedded into the image files, in third-party files or even off-camera log files), how it is extracted and what software is used to process it.
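As an illustration of this comparison between field notes and recorded metadata, here is a minimal Python sketch. The field names (`clip`, `lens`, `focal_mm`, `t_stop`, `focus_m`), the clip name and the 5 % tolerance are assumptions made for the example, not a format used by any camera or by our workshop.

```python
from dataclasses import dataclass


@dataclass
class ShotNote:
    """What the crew wrote down for one take (hypothetical fields)."""
    clip: str
    lens: str
    focal_mm: float
    t_stop: float
    focus_m: float


def compare(note: ShotNote, recorded: dict, rel_tol: float = 0.05) -> list:
    """Return the names of fields where recorded metadata disagrees with the note."""
    mismatches = []
    if recorded.get("lens") != note.lens:
        mismatches.append("lens")
    for name in ("focal_mm", "t_stop", "focus_m"):
        expected = getattr(note, name)
        value = recorded.get(name)
        # A missing value counts as a mismatch: the metadata was not recorded.
        if value is None or abs(value - expected) > rel_tol * abs(expected):
            mismatches.append(name)
    return mismatches


# Illustrative values only, not measurements from the workshop.
note = ShotNote("A001C003", "Cooke 32mm", 32.0, 2.8, 3.0)
recorded = {"lens": "Cooke 32mm", "focal_mm": 32.0, "t_stop": 2.8, "focus_m": 3.4}
print(compare(note, recorded))  # only the focus distance is out of tolerance
```

Treating a missing field as a mismatch is a deliberate choice here: part of the exercise is finding out which values are not recorded at all.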

The layout of the trackers should ideally be 3D scanned with a scale reference. This can be done simply using an iPhone (from iPhone 12 pro upwards) or a recent iPad via a photogrammetry application.

With an indicative schedule that should highlight the relevance of using optical metadata, we also know that we will have to adapt to the equipment actually available on the day of the shoot at our partners’ facilities.

We are working on the basis of the following cases:

– 1. Rack focus on tripod: execute a focus switch between two distant markers, a fast version (2s) and a slow version (10s), to test sampling dynamics.

– 2. Variable focal length (for zooms) on tripod: perform a focal length changeover between two distant markers, a fast version (2s) and a slow version (10s), to test sampling dynamics.

– 3. Variable aperture on tripod: perform an aperture change, a fast version (2s) and a slow version (10s), to test sampling dynamics.

– 4. Focus pull and handheld camera: perform a range shot with lateral movement to create parallax. Combine with panning to frame all 7 markers. The focus puller will lock onto the depth of the middle row.

– 5. Change of focal length (zoom) plus rack focus with a handheld camera: execute a range shot with lateral movement to create parallax and switch focal length. Combine with panning to frame all markers. The focus puller will target the middle row.
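To make "sampling dynamics" concrete, here is a hedged Python sketch that measures, for a recorded focus curve, the mean sample rate and the largest jump between two consecutive samples. The 25 Hz rate, the 1 m to 5 m distances and the perfectly linear move are illustrative assumptions, not recorded data.

```python
def sampling_report(samples):
    """samples: list of (time_s, value) pairs sorted by time.

    Returns (mean sample rate in Hz, largest jump between consecutive values).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    duration = samples[-1][0] - samples[0][0]
    rate_hz = (len(samples) - 1) / duration
    max_step = max(abs(b[1] - a[1]) for a, b in zip(samples, samples[1:]))
    return rate_hz, max_step


def linear_rack(duration_s, rate_hz=25, start_m=1.0, end_m=5.0):
    """Synthetic rack focus: a linear move sampled at a fixed rate (assumed)."""
    n = int(duration_s * rate_hz)
    return [(i / rate_hz, start_m + (end_m - start_m) * i / n) for i in range(n + 1)]


fast = sampling_report(linear_rack(2))    # fast version of the move
slow = sampling_report(linear_rack(10))   # slow version of the move
print(fast, slow)
```

At the same sample rate, the fast move produces jumps five times larger per sample than the slow one, which is why pairing a fast and a slow version of each move helps reveal how finely a system samples.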

LDS, /i and eXtended

These three names refer to optical metadata technologies:

– LDS is the name of the Lens Data System designed by Arri

– /i is the protocol designed by Cooke

– eXtended Data, derived from /i, is the protocol developed by Zeiss

In concrete terms, the 3 systems extract key data useful for post-production, and by extension for VFX. Some of this data is easily readable in editing or color-grading software, while other data is more specific and less accessible.

The basic data are:

– Name, type and focal length of lens used

– Focus distance

– Aperture

– Depth of field

– Hyperfocal distance

The /i2 protocol adds gyro metadata and /i3 adds shading and distortion metadata (up to 285 fps).

The eXtended Data protocol adds distortion data as well as shading data, both computed from aperture, focal length and focus distance.

The LDS-2 is announced as having a higher data rate than the LDS-1, enabling greater precision at high frame rates (for slow-motion shots, for example). Arri is also opening up the use of the LDS-2 protocol to other manufacturers.

Cameras, optics and dedicated devices

For our choice of camera bodies and optics, we’ve endeavored to determine classic shooting configurations, the idea being to integrate modern optics equipped with metadata as well as vintage optics.

For our first workshop, on February 23, 2023, we have a Sony Venice 2, an Arri Alexa 35 and even a Phantom Flex. On the optics side, we’ll be using Zeiss Supreme Primes, Cooke SF+FF and Zeiss Compact Zooms.

We also have two essential little devices: an Arri UMC-4 (Universal Motor Controller) and Ambient’s ACN-LP Lockit+.

In our case, the Arri UMC-4 allows metadata to be retrieved via the connection with the FIZ motors (Focus, Iris, Zoom) and recorded on an SD card. To correctly interpret the metadata it receives, the UMC-4 must be loaded with an LDA (Lens Data Archive), a file that lets each listed lens (by brand, model, focal length) be recognized by the camera, so that the metadata sent by the lens is correctly translated and written to the right place in the file for use in post-production.

The Lockit+ not only feeds timecode to the camera (and UMC-4), but also retrieves metadata directly from the lens if it is plugged with a Lemo cable (as is the case with /i and eXtended lenses).

It should be remembered here that, depending on the brand and model, optical metadata passes either through the PL mount contacts or through a specific Lemo socket, and sometimes both ways. However, it remains to be determined whether the same information passes through the mount and the Lemo socket, bearing in mind that in the case of the mount the information is intended to be wrapped into the metadata of the image files, while in the case of the Lemo it is intended for devices outside the camera body and is stored in sidecar files.

The UMC-4 box is well known to camera assistants, who use optical data to help with focus work: thanks to LDAs, a dedicated device can actually display depths of field calculated using the focal length, aperture and focus point of a given lens.
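For reference, the kind of calculation involved can be sketched with the textbook thin-lens formulas for hyperfocal distance and depth of field. This is not Arri’s implementation. The circle of confusion (0.03 mm) is an assumed value, and the sketch uses the f-number where real cine lenses are marked in T-stops.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance H = f^2 / (N * c) + f, all lengths in millimetres."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness for a given focus distance."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    if focus_mm >= h:
        far = math.inf  # focused at or beyond the hyperfocal distance
    else:
        far = focus_mm * (h - focal_mm) / (h - focus_mm)
    return near, far


# Illustrative case: 32 mm lens at f/2.8 focused at 3 m.
near, far = dof_limits_mm(32.0, 2.8, 3000.0)
print(f"sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

With these assumed values the sharp zone runs from roughly 2.4 m to 4.0 m. A different circle of confusion, which depends on sensor size and viewing conditions, shifts both limits.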

The ACN-LP Lockit+ not only retrieves lens metadata, but also links this data to the timecode. This means you can record which lens settings were in use at which moment, over the entire duration of the recorded shot, and so access the physical data used to produce a given shot. A gold mine for post-production and VFX.
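Linking lens data to timecode ultimately comes down to locating a sample on the frame count of a shot. Here is a minimal sketch of the conversion, assuming non-drop-frame `HH:MM:SS:FF` timecode at an integer frame rate such as 25 fps. The function names are ours.

```python
def timecode_to_frames(tc: str, fps: int = 25) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} is not valid at {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(frames: int, fps: int = 25) -> str:
    """Inverse conversion, back to 'HH:MM:SS:FF'."""
    ss, ff = divmod(frames, fps)
    mm, ss = divmod(ss, 60)
    hh, mm = divmod(mm, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


start = timecode_to_frames("10:00:00:00")
sample = timecode_to_frames("10:00:01:12")
print(sample - start)  # offset of the lens sample from the start of the shot, in frames
```

Drop-frame timecode (used with 29.97 fps) needs extra handling that this sketch deliberately leaves out.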

A word of clarification, however: the latest version of Lockit+ only integrates Cooke’s /i3 extended metadata recording. There was talk of Zeiss compatibility in the future, but no certainty yet. Thanks to Yannic Hieber @ambient.de, who kindly entrusted us with a prototype for our work.

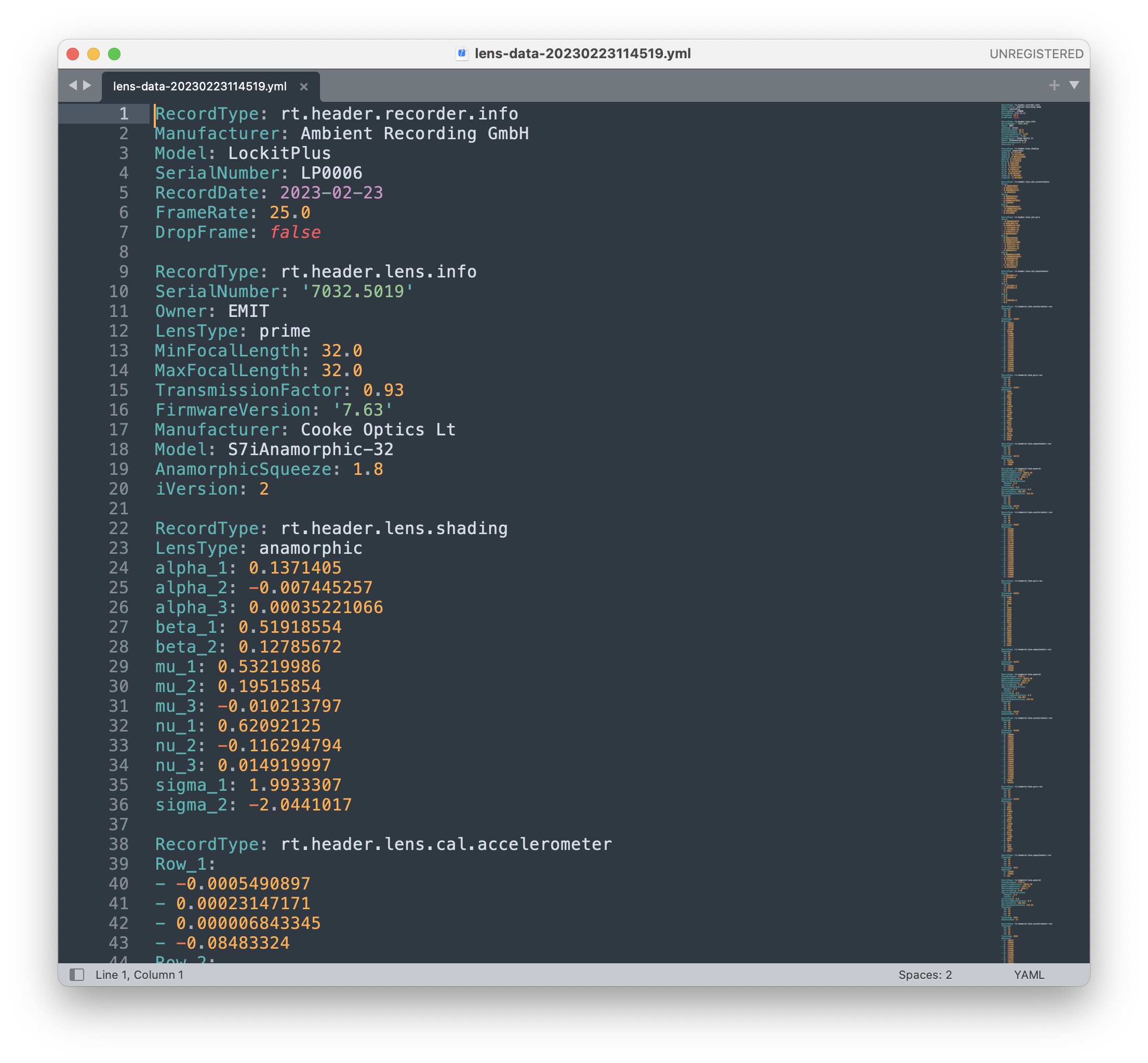

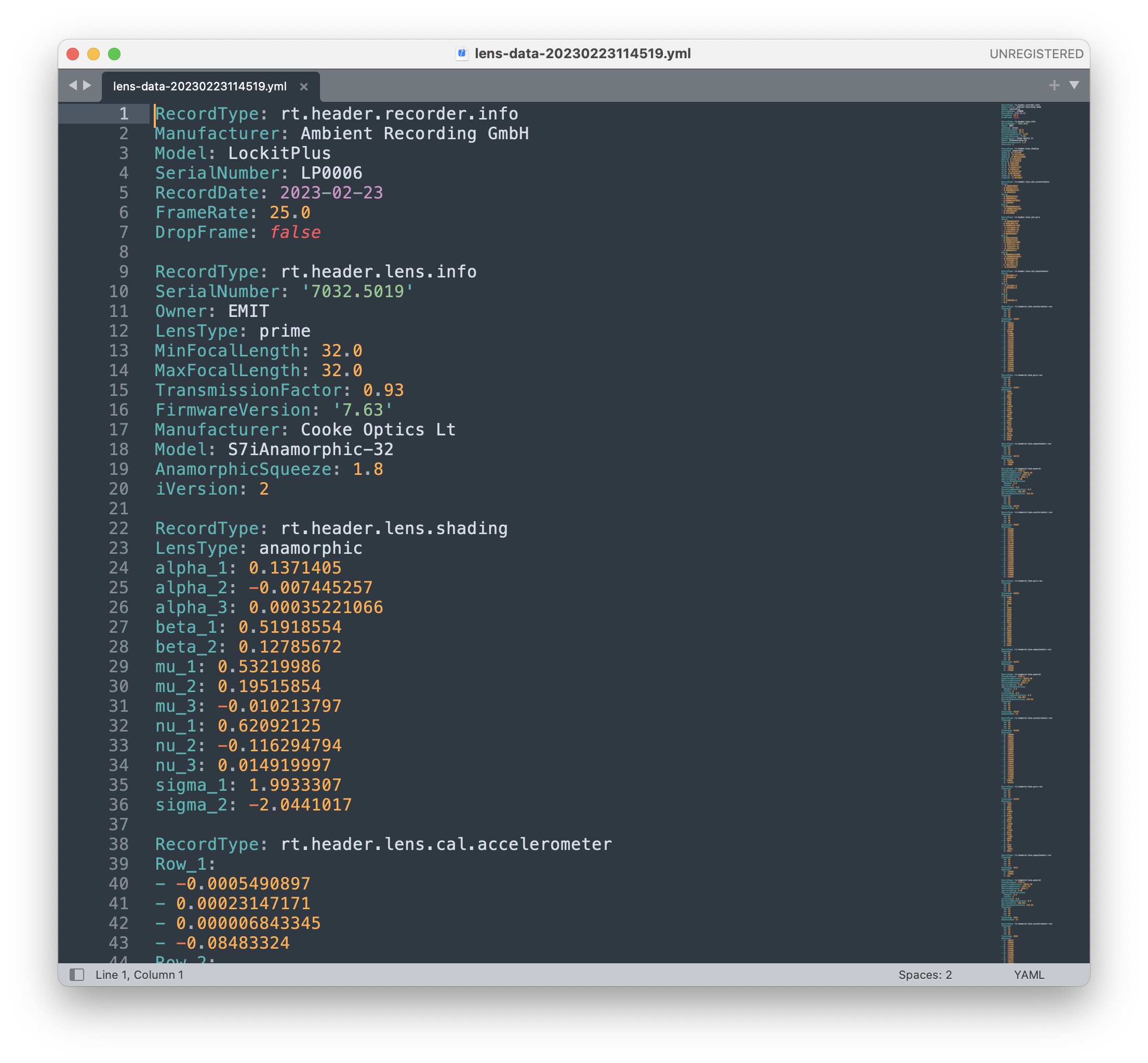

This enabled us to record raw data from Cooke lenses with synchronized timecode on SD card in YAML format. For Zeiss configurations, the box simply injected the timecode into the camera and into the electronic slate that was framed. We then had to rely on the cameras’ ability to record metadata via the PL mount.

We face several challenges:

– How to record the metadata generated by the optics for a given camera and series of optics: is this data included in the image files or in other files generated by the cameras? Can/should this data be retrieved by third-party devices?

– Identify what equipment we need to record this data: boxes, cables, mounts… And whether our French rental companies are able to supply them!

– Determine which software can display the recorded data and make use of it.

– Affirm the need for a technical dialogue between shooting and post-production to ensure that the metadata generated during shooting is not lost during post-production, given that this metadata is of little interest to editing but is very useful for VFX.

Remember that we’re focusing on shooting conditions outside the virtual studio.

First contacts

We first connect a Venice 2 fitted with a 32mm from the Cooke SF+FF series, with the following settings: X-OCN XT, 8.6K 3:2 with 1.8x anamorphic de-squeeze, shutter at 90.0°, project frame rate 25 fps.

We choose a 90° shutter to avoid excessive motion blur. This shutter angle is often recommended to facilitate the integration of VFX when using green and blue screens, the motion blur being added later to make the rendering of movement more natural to the eye.
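As a quick worked example, the exposure time that corresponds to a shutter angle is the angle divided by 360, divided by the frame rate. The helper name below is ours.

```python
from fractions import Fraction


def exposure_time_s(shutter_angle_deg, fps):
    """Exposure time per frame, in seconds, for a rotary-shutter angle."""
    return Fraction(shutter_angle_deg) / 360 / Fraction(fps)


print(exposure_time_s(90, 25))   # 1/100
print(exposure_time_s(180, 25))  # 1/50
```

At 25 fps, a 90° shutter therefore exposes each frame for 1/100 s, half the exposure time of the usual 180°.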

The set and the positioning of the trackers in relation to the camera are measured by hand and also scanned in 3D with photogrammetry software (here Polycam, on iPad or iPhone), the idea being to ensure that the distances recorded by the metadata are in line with actual distance measurements.

A round object is positioned at the boundary of the frame to monitor any distortion and potentially calculate a precise distortion coefficient.
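To give an idea of what calculating a distortion coefficient can look like, here is a hedged sketch using the simplest one-parameter radial model, r_d = r_u · (1 + k1 · r_u²), fitted by least squares. Both the model and the assumption that we have pairs of ideal and observed radii (in normalised image units) are ours. VFX software typically uses richer models, and the data below is synthetic.

```python
def fit_k1(pairs):
    """Least-squares estimate of k1 in r_d = r_u * (1 + k1 * r_u**2).

    pairs: iterable of (undistorted_radius, observed_radius), in normalised
    image units measured from the optical centre.
    """
    pairs = list(pairs)
    num = sum(ru ** 3 * (rd - ru) for ru, rd in pairs)
    den = sum(ru ** 6 for ru, _ in pairs)
    if den == 0:
        raise ValueError("need at least one point away from the image centre")
    return num / den


# Synthetic check: generate points with a known coefficient, then recover it.
true_k1 = -0.05  # negative k1 corresponds to barrel distortion in this model
radii = [0.2, 0.4, 0.6, 0.8, 1.0]
pairs = [(ru, ru * (1 + true_k1 * ru ** 2)) for ru in radii]
k1 = fit_k1(pairs)
print(round(k1, 6))
```

Points near the edge of the frame carry most of the weight in the fit, which is consistent with placing the reference object at the boundary of the image.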

We plug in the Lockit+: the red cable sends the timecode to the camera, and the blue cable recovers the optical data sent by the lens.

Once our computer is connected via Wi-Fi to the Lockit+ box, the data are displayed, enabling us to record each shot and all the metadata transmitted by the optics. The box records the data in a plain-text file in YAML format (an open format, somewhere between XML and CSV), but it’s raw data. We’ll then need to find software that can parse this data and merge it with the metadata of an image file, based on the timecode and on the classic data describing the shot (name, date, reel number, etc.).
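The merge step can be sketched as a simple join on timecode. The record layout below (`timecode`, `focus_m`, `t_stop`, `frame`) is hypothetical, since we do not describe the actual structure of the Lockit+ file here. The YAML itself could be loaded into such dictionaries with a parser like PyYAML’s `yaml.safe_load`.

```python
def merge_by_timecode(frames, lens_samples):
    """Attach to each image frame the lens sample that carries the same timecode.

    frames and lens_samples are lists of dicts that each contain a 'timecode'
    key (hypothetical layout). Frames with no matching sample get lens=None.
    """
    by_tc = {sample["timecode"]: sample for sample in lens_samples}
    merged = []
    for frame in frames:
        sample = by_tc.get(frame["timecode"])
        row = dict(frame)
        if sample is None:
            row["lens"] = None
        else:
            row["lens"] = {k: v for k, v in sample.items() if k != "timecode"}
        merged.append(row)
    return merged


# Illustrative records, not data from the workshop.
frames = [
    {"clip": "A001C003", "frame": 0, "timecode": "10:00:00:00"},
    {"clip": "A001C003", "frame": 1, "timecode": "10:00:00:01"},
    {"clip": "A001C003", "frame": 2, "timecode": "10:00:00:02"},
]
lens_samples = [
    {"timecode": "10:00:00:00", "focus_m": 3.00, "t_stop": 2.8},
    {"timecode": "10:00:00:01", "focus_m": 3.05, "t_stop": 2.8},
]
merged = merge_by_timecode(frames, lens_samples)
print(merged[1]["lens"], merged[2]["lens"])
```

An exact match on the timecode string only works if both devices run at the same frame rate. If the lens is sampled faster than the camera, a nearest-sample or interpolation strategy would be needed instead.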

We then switch to a Zeiss Supreme Prime 15mm, adapting the camera settings as we go spherical. However, we keep the shutter at 90°. We load the LDA into the UMC-4 and record the metadata from the motors, to be compared with the metadata recorded via the Lockit+ box.

Note that with the Venice 2, we have to manually trigger the recording on the UMC-4.

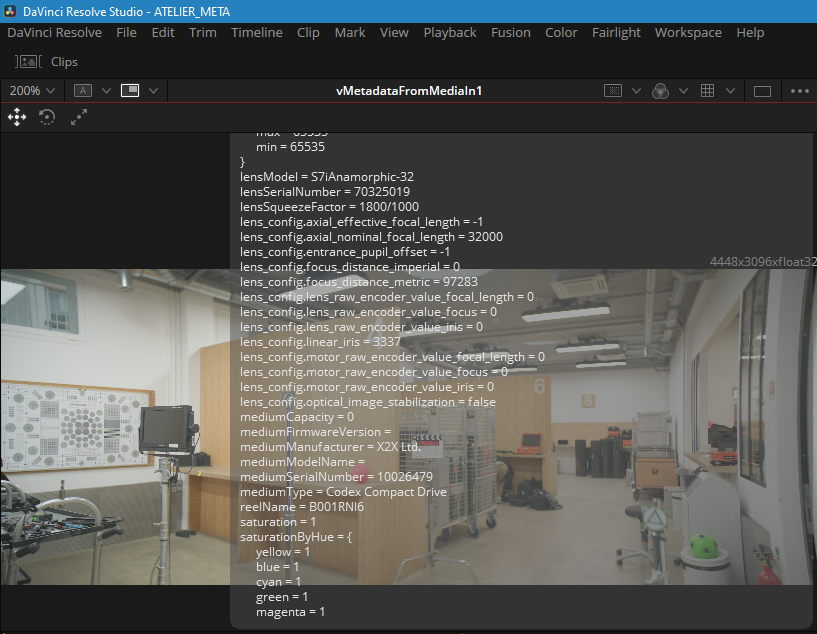

To get an initial idea of the fruits of our efforts, we compare the metadata actually recorded with the same rush from the Venice.

With Sony’s Raw Viewer, we can see the type of lens, the sensor, the f-stop and the focus distance in meters, but this is a fixed point (at the very beginning of the shot?), with no dynamic data (i.e. no changes over the duration of the shot).

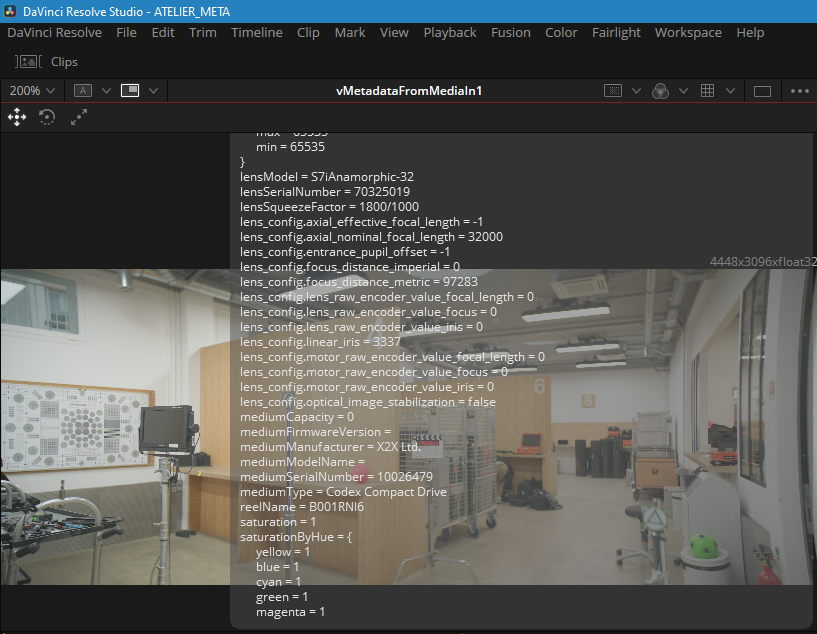

In DaVinci Resolve, you have to dig a little deeper!

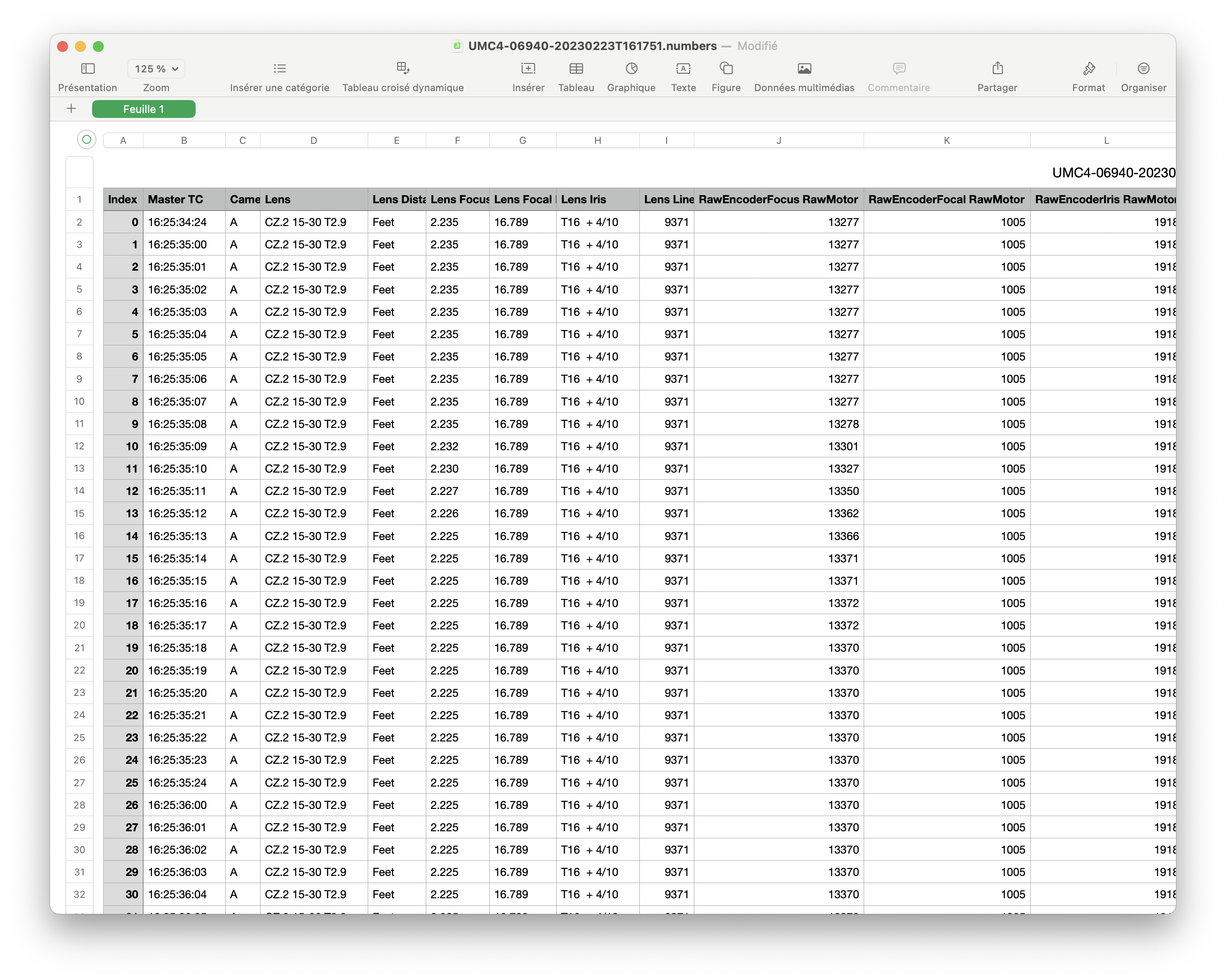

With Lockit+, the columns are labelled as follows: Lens general, Lens shading, Gyro, Focus distance, Hyper focal, Min distance, Max distance. Depending on the focal length, we could also add vignetting and distortion data.

We then switch to a Zeiss 15-30mm Compact Zoom, to add dynamic data to the focal length values, which are displayed like the other values in real time on the Lockit+ box. As noted earlier, however, the Lockit+ does not yet record Zeiss metadata.

We then switch to an Alexa Mini LF (4.5K recording) with the 32mm Cooke SF+FF, then the 50mm Zeiss Supreme Prime. Data is still transmitted via the Lemo cable to the Lockit+ box.

Switching to Arri means that data recording on the UMC-4’s SD card starts automatically as soon as recording is initiated on the Mini LF (auto trigger). This is a pleasant surprise, as it’s much more comfortable.

To round off the day, and because the camera is right in front of us, we put the 32mm Cooke SF+FF on the Phantom Flex. The camera is rather rudimentary, and a problem soon arises: how do you inject timecode into a time base of 1000 frames per second? And what’s the point of writing FIZ data to an SD card if no timecode synchronizes the camera with the UMC-4 box?
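One way to picture the problem: if timecode keeps running at the 25 fps project rate while the camera captures 1000 frames per second, each timecode value covers 40 captured frames, so timecode alone cannot tell them apart. The sketch below labels a high-speed frame with a timecode plus a sub-frame index. This labelling scheme is our illustration, not something the Phantom or the UMC-4 provides.

```python
def label_high_speed_frame(index, capture_fps=1000, timecode_fps=25):
    """Map a captured frame index to (timecode, sub-frame index).

    Assumes timecode counts at timecode_fps, starts at 00:00:00:00 and that
    capture_fps is an exact multiple of timecode_fps.
    """
    if capture_fps % timecode_fps:
        raise ValueError("capture rate must be a multiple of the timecode rate")
    per_tc_frame = capture_fps // timecode_fps  # 40 captured frames per timecode value
    tc_frames, sub = divmod(index, per_tc_frame)
    ss, ff = divmod(tc_frames, timecode_fps)
    mm, ss = divmod(ss, 60)
    hh, mm = divmod(mm, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}", sub


print(label_high_speed_frame(39))    # still within the first timecode frame
print(label_high_speed_frame(40))    # first captured frame of the next timecode frame
```

Without such a sub-frame index, or some other shared clock, 40 consecutive captured frames would share the same timecode and lens samples could not be matched to individual frames.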

We’re sure there could be further tests, but that would be another chapter, too specific for the purpose of our current work.

In a forthcoming article, we’ll see how lens data can be generated when using non-smart lenses. To be continued!

Thanks to Valentine Lequet, co-president of UCO, Sara Cornu, Larry Rochefort and Teddy Ajolet, camera assistant. Many thanks to the PhotoCineRent team for their warm welcome and involvement.

Also thanks to Françoise Noyon, Yannic Hieber, Vanessa Lafaille and Pierre Billet.